Moore’s law is the observation that the number of transistors in a dense integrated circuit doubles about every two years. The observation is named after Gordon Moore, co-founder of Fairchild Semiconductor and later CEO of Intel, whose 1965 paper described a doubling every year in the number of components per integrated circuit and projected that this rate of growth would continue for at least another decade.

Most forecasters, including Gordon Moore, expect Moore’s law will end by around 2025.

In April 2005, Gordon Moore stated in an interview that the projection cannot be sustained indefinitely:

“It can’t continue forever. The nature of exponentials is that you push them out and eventually disaster happens.”

For years hardware designers have come up with ever more sophisticated memory handling and acceleration techniques, such as CPU caches, but these cannot work optimally without some help from the programmer.

Years of exponential increase in CPU frequency meant free speed-up for existing software, but they also caused and then worsened the processor-memory bottleneck. This means that, to achieve the highest possible performance, software has to be restructured and tuned to the memory hierarchy.
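As a minimal sketch of what tuning to the memory hierarchy can mean in practice, the two functions below compute the same sum over a matrix stored in row-major order. The function names and the flat `std::vector` layout are assumptions made for this illustration. The first function visits elements in the order they sit in memory; the second jumps `cols` elements at a time, which on large matrices tends to cause far more cache misses.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sums a rows x cols matrix stored in row-major order, visiting the
// elements in the same order in which they are laid out in memory.
double sum_row_major(const std::vector<double>& m, std::size_t rows, std::size_t cols) {
    assert(m.size() == rows * cols);
    double total = 0.0;
    for (std::size_t r = 0; r < rows; ++r)
        for (std::size_t c = 0; c < cols; ++c)
            total += m[r * cols + c];
    return total;
}

// Same result, but the inner loop strides through memory by `cols`
// elements per step, so consecutive accesses rarely share a cache line.
double sum_column_major(const std::vector<double>& m, std::size_t rows, std::size_t cols) {
    assert(m.size() == rows * cols);
    double total = 0.0;
    for (std::size_t c = 0; c < cols; ++c)
        for (std::size_t r = 0; r < rows; ++r)
            total += m[r * cols + c];
    return total;
}
```

Both functions return the same value; how large the difference in run time is depends on the matrix size and the cache sizes of the machine, so it should be measured rather than assumed.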

In 2016 the International Technology Roadmap for Semiconductors, after using Moore’s Law to drive the industry since 1998, produced its final roadmap.

With the end of Moore’s law and the increase in mobile and embedded device usage and the Internet of Things, it’s critical to have software that is not only correct, but optimized for performance and efficiency.

At its most basic level, software optimization is beneficial and should always be applied.

If you don’t optimize your code, but your competition does, then they’ll have a faster and more efficient solution, independent of hardware improvements.

- Hardware performance improvement expectations almost always exceed reality

- PC disk performance has remained relatively stable for the past 5 years

- Memory technology has been driven more by quantity than performance

- Hardware improvements often only target optimal software solutions …

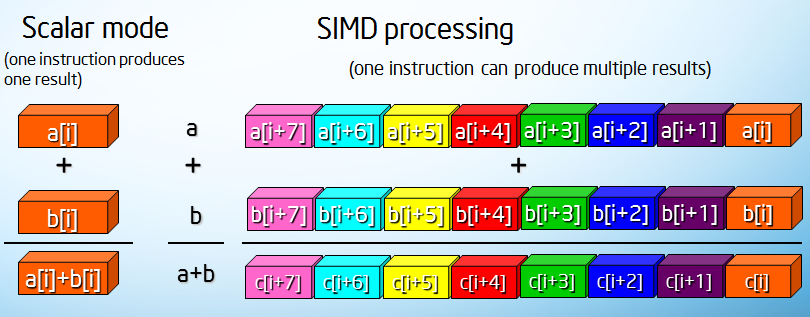

For example, Intel has made some tremendous improvements in processors with its Streaming SIMD Extensions (SSE), a single instruction, multiple data (SIMD) instruction set extension to the x86 architecture that can greatly increase performance when exactly the same operations are to be performed on multiple data objects. But as with all x86 instruction set extensions, it is up to the application programmer to test for and detect their existence and proper operation.
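The sketch below illustrates the idea of performing the same operation on several data objects at once. It adds two `float` arrays four elements at a time with SSE intrinsics. The function name `add_arrays` is hypothetical, and the check is a simplification: it relies on the `__SSE__` macro that GCC and Clang define at compile time when SSE is enabled, and falls back to a scalar loop otherwise. Detection at run time needs a compiler- or platform-specific mechanism and is left out here.

```cpp
#include <cassert>
#include <cstddef>
#if defined(__SSE__)
#include <xmmintrin.h>  // SSE intrinsics (__m128, _mm_add_ps, ...)
#endif

// Adds two float arrays element-wise: out[i] = a[i] + b[i].
void add_arrays(const float* a, const float* b, float* out, std::size_t n) {
    assert(a != nullptr && b != nullptr && out != nullptr);
    std::size_t i = 0;
#if defined(__SSE__)
    // SSE path: one instruction adds four floats. Unaligned loads and
    // stores are used so the arrays need no special alignment.
    for (; i + 4 <= n; i += 4) {
        const __m128 va = _mm_loadu_ps(a + i);
        const __m128 vb = _mm_loadu_ps(b + i);
        _mm_storeu_ps(out + i, _mm_add_ps(va, vb));
    }
#endif
    // Scalar loop: handles the remaining elements, or all of them
    // when the SSE path was not compiled in.
    for (; i < n; ++i)
        out[i] = a[i] + b[i];
}
```

An optimizing compiler may vectorize the plain scalar loop on its own, so it is worth inspecting the generated code or measuring before reaching for intrinsics.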

Nowadays, college courses in software development often focus on the importance of agile software development methods, object-oriented programming, modularity, reusability, and systematization of the software development process. These requirements are often in conflict with the requirements of optimizing software for speed or efficiency.

Today, it’s common for software development teachers to recommend that no function or method should be longer than a few lines. A few decades ago, the recommendation was the opposite: “Don’t put something in a separate function, if it’s only called once”.

The reason for this shift in software development methodology is that computers have become more powerful, software projects have become larger and more complex, and there is more focus on the costs of software development than on program performance or resource usage. This focus often turns out to cost more because of lost time, lost customers and the expense of using more resources.

The high priority of structured software development and the low priority of software efficiency are reflected in the choice of programming language and interface frameworks. This directly affects the end user, who has to invest in more powerful computers to keep up with the bigger software packages and who is still frustrated by unacceptably long response times, even for simple tasks.

In today’s fast paced, competitive world, software performance is just as important to customers as the features it provides.

It may not be enough to accurately convert the cool idea in your head into lines of code. It may not even be enough to analyze the code for defects until it runs correctly all the time. Your application may still be too slow to provide the user experience required for a viable product.

To make software faster and more efficient, it is often necessary to compromise on some elements of a particular software development methodology.

The goal of software optimization is to improve the behaviour of a correct program so that it also meets the customer’s need for speed, throughput and resource usage.

Software optimization is just as important to the development process as implementing features is. Poor performance is the same kind of problem for end users as bugs and missing features.

It can be difficult for some software developers to reason about the effects of individual programming decisions on the overall performance of a large program.

Practically all complete software application programs contain significant opportunities for optimization. Even code produced by experienced software development teams with plenty of time can often be sped up by 30%–100%. For more rushed or less experienced teams, we have seen performance improvements of 3 to 10 times.

The selection of better algorithms or data structures can be the difference between whether a feature can be deployed or is unfeasibly slow.

It is important to identify and isolate the most critical parts of a program and concentrate optimization efforts on those particular parts.

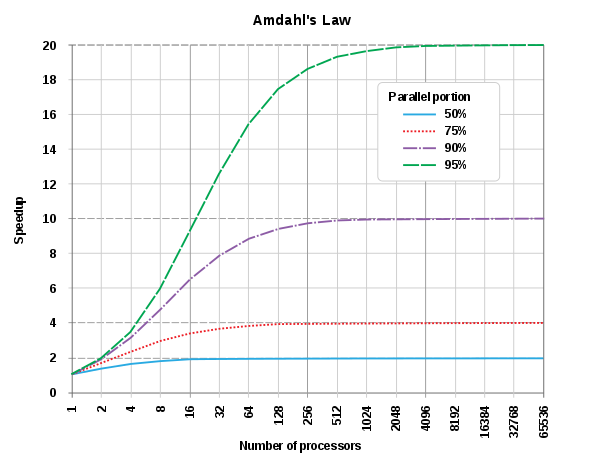

Amdahl’s Law is a formula which gives the theoretical speedup in latency of the execution of a task at fixed workload that can be expected of a system whose resources are improved.

When deciding whether to optimize a specific part of the program, Amdahl’s Law should always be considered: the impact on the overall program depends very much on how much time is actually spent in that specific part, which is not always clear from looking at the code without a performance analysis.
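To make this concrete, here is a small sketch of the usual form of Amdahl’s Law, S = 1 / ((1 - p) + p / s), where p is the fraction of the run time spent in the part being optimized and s is the speedup achieved for that part alone. The function name and parameter names are chosen for this illustration.

```cpp
#include <cassert>

// Overall speedup of a program when a fraction `p` of its run time
// (0 <= p <= 1) is made `s` times faster (s > 0):
//   S = 1 / ((1 - p) + p / s)
double amdahl_speedup(double p, double s) {
    assert(p >= 0.0 && p <= 1.0 && s > 0.0);
    return 1.0 / ((1.0 - p) + p / s);
}
```

For example, `amdahl_speedup(0.1, 10.0)` gives about 1.10: making a part that accounts for 10% of the run time ten times faster improves the whole program by only about 10%. Applying the same tenfold improvement to a part that accounts for 90% of the run time, `amdahl_speedup(0.9, 10.0)`, gives about 5.26.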

A better approach is therefore to design first, code from the design and then profile/benchmark the resulting code to see which parts should be optimized. A simple and elegant design is often easier to optimize at this stage, and profiling may reveal unexpected performance problems that would not have been addressed by premature optimization.

In practice, it is important to keep performance goals in mind when first designing software. Existing software can be made more valuable by rewriting performance-critical parts.

Sometimes, a critical part of the program can be re-written in a different programming language that gives more direct access to the underlying machine. For example, it is common for very high-level languages like Python to have modules written in C or C++ for greater speed. Programs already written in C++ can have modules written in Assembly.

Rewriting sections “pays off” in these circumstances because of a general “rule of thumb” known as the 90/10 law, which states that 90% of the time is spent in 10% of the code, and only 10% of the time in the remaining 90% of the code. So, putting intellectual effort into optimizing just a small part of the program can have a huge effect on the overall speed — if the correct part(s) can be located.

At the highest level, the software design may be optimized to make best use of the available resources, given goals, constraints, and expected use/load.

The architectural design of a system overwhelmingly affects its performance. For example, a system that is network latency-bound (where network latency is the main constraint on overall performance) would be optimized to minimize network trips, ideally making a single request (or no requests, as in a push protocol) rather than multiple roundtrips.

Performance is part of the software specification: a program that is unusably slow is not fit for purpose.

For example, a video game running at 60 frames per second is acceptable, but one running at 6 frames per second is unacceptably choppy.

Software performance should be considered from the start to ensure that the final system is able to deliver sufficient performance.

Early prototypes should have roughly acceptable performance, so there will be confidence that the final system will (with optimization) be able to meet its performance requirements. Optimization is sometimes deferred in the belief that it can always be done later. The result is prototype systems that are far too slow, often by an order of magnitude or more, and systems that ultimately fail because architecturally they cannot achieve their performance goals.

Choosing a programming language

Performance bottlenecks can be due to programming language limitations rather than algorithms or data structures used in the program.

The programming language you choose for the development of your software application should be based on the specific functionality to be achieved.

Programming languages capable of working at a lower level are well suited for optimizing execution speed and program size, while slower higher level languages may be better for the quick development of user interfaces, prototypes and test functions.

The highest level of application efficiency is obtained with binary executables compiled from languages such as C, C++ and assembly language.

Interpreted programming languages, such as JavaScript, PHP, Python, and classic ASP, are interpreted line by line when run. Interpreted code is very inefficient because the code must be parsed at run time. This creates a lot of overhead, typically slowing down execution by a factor of 20 to 1000.

The choice of programming language is sometimes a compromise between efficiency, portability, and development time. However, when efficiency or performance is important, interpreted languages are unacceptable.

Programming languages that are based on intermediate code and just-in-time compilation, such as C#, Visual Basic .NET and the best Java implementations, may be a viable compromise when portability and ease of development are more important than speed. However, these languages have the disadvantage of very large runtime frameworks that must be loaded every time the program is run.

The time it takes to load the framework and compile the program is often much more than the time it takes to execute the program; and the runtime framework may use more resources than the program itself when running. Programs using such a framework sometimes have unacceptably long response times even for simple tasks.

Clearly, the fastest execution is obtained with fully compiled code.

Our preference for the C++ programming language is for several reasons:

- C++ is supported by some very good compilers and well designed function libraries that are able to produce optimized code without any additional work.

- C++ is an advanced high-level language with a lot of features rarely found in other languages.

- The C++ language includes the C language as a subset, providing easy access to low-level optimizations.

- Most C++ compilers are able to generate an assembly language output, which is useful for checking how well the compiler optimizes a piece of code.

- Software development in C++ is very efficient, thanks to powerful, well integrated tools, like Microsoft’s Visual Studio.

- The C++ Standard Template Library (STL) contains a large number of optimized algorithms to perform common activities such as searching and sorting.
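As a brief illustration of the last point, the sketch below uses two standard library algorithms, `std::sort` and `std::binary_search`, instead of hand-written loops. The wrapper function `sorted_contains` is a hypothetical name introduced only for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Sorts `values` in place, then reports whether `key` is present.
// std::binary_search requires a sorted range, which std::sort provides.
bool sorted_contains(std::vector<int>& values, int key) {
    std::sort(values.begin(), values.end());
    assert(std::is_sorted(values.begin(), values.end()));
    return std::binary_search(values.begin(), values.end(), key);
}
```

In real code the data would normally be sorted once and then searched many times; sorting on every lookup, as this compact sketch does, would waste the benefit of the binary search.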

Additionally, most C++ compilers allow assembly-like intrinsic functions, inline assembly, or easy linking to assembly language modules when the highest level of optimization is needed.

The C++ programming language is also portable in the sense that C++ compilers exist for all major platforms.

C++ is the programming language of choice when the optimization of performance has high priority. The gain in performance over other programming languages can be quite substantial. This gain in performance can easily justify a possible minor increase in development time when performance is important to the end user.

One of the main reasons for preferring C++ over simpler, higher-level programming languages is that C++ facilitates the construction of complex software in a way that makes more efficient use of hardware resources than when using other languages.

The C++ language does not guarantee efficient code automatically, but provides the tools that enable experienced programmers to produce highly efficient software.

Sloppy C++ code may be no more efficient than higher-level implementations of the same algorithms, but a good C++ programmer with knowledge of the language can write software that is efficient from the start and then optimize the code further.

There may be cases where, for certain purposes, a high-level architecture based on intermediate code is required but part of the code still needs careful optimization. In such cases a mixed implementation could be a viable solution.

Much of the code in a typical software project goes into the development of the user interface. Applications that are not computationally intensive may very well spend more CPU time on the user interface than on the primary, essential task of the program.

The most critical parts of the code can be implemented in compiled C++ and the rest of the code, including user interface, can be implemented in the high level framework. The optimized part of the code can possibly be compiled as a dynamic link library (DLL) which is called by the rest of the code.
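A minimal sketch of such a boundary is shown below, assuming the time-critical work can be expressed as a single function over an array. The macro `HOTPATH_API` and the function `hotpath_sum_of_squares` are hypothetical names. The function is given C linkage so that its name is not mangled and other languages or frameworks can locate it, and on Windows it is marked for export from the DLL.

```cpp
#include <cassert>
#include <cstddef>

// Export with C linkage; on Windows also mark the symbol for DLL export.
#if defined(_WIN32)
#define HOTPATH_API extern "C" __declspec(dllexport)
#else
#define HOTPATH_API extern "C"
#endif

// Hypothetical time-critical routine: returns the sum of data[i]^2.
// It takes a plain pointer and a length so the interface stays usable
// from callers that know nothing about C++ types.
HOTPATH_API double hotpath_sum_of_squares(const double* data, std::size_t count) {
    assert(data != nullptr || count == 0);
    double total = 0.0;
    for (std::size_t i = 0; i < count; ++i)
        total += data[i] * data[i];
    return total;
}
```

Passing the whole array in one call, rather than calling into the library once per element, keeps the number of transitions between the two kinds of code low.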

However, this is not an optimal solution because the high level framework still consumes a lot of resources, and the transitions between the two kinds of code add overhead that consumes CPU time. But this solution can still give a considerable improvement in performance if the time-critical part of the code can be completely contained in a DLL.

Using a profiler to find hot spots

You have to identify the essential parts of the program before optimizing anything. Some programs spend more than 90% of the time performing mathematical calculations in the innermost loop. For other systems, more than 90% of the time is spent on reading and writing data files, while less than 10% is spent on actually doing something. It’s important to optimize the parts of the code that matter most rather than the parts of the code that use only a small fraction of the total time. Optimizing less critical parts of the code will not only be a waste of time, it also makes the code less clear and more difficult to debug and maintain.

Most compiler packages include a profiler that can tell how many times each function is called and how much time it uses.

Once the most time-consuming parts of the program have been found, focus the optimization efforts on those parts only.
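When a profiler is not at hand, a rough first measurement of a suspected hot spot can be made with the standard `std::chrono` clock. The helper below is a sketch with a hypothetical name, `measure_seconds`; it is not a substitute for a real profiler, because it only times the code you already suspect.

```cpp
#include <cassert>
#include <chrono>

// Runs `work` the given number of times and returns the average
// wall-clock time per run in seconds. steady_clock is used because
// it never jumps backwards when the system time is adjusted.
template <typename Work>
double measure_seconds(Work&& work, int repetitions) {
    assert(repetitions > 0);
    const auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < repetitions; ++i)
        work();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count() / repetitions;
}
```

A call such as `measure_seconds([&] { process(data); }, 100)`, where `process` and `data` stand in for your own code, averages over 100 runs to smooth out noise. Very short pieces of work need many repetitions before the result means anything.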

Performance and usability

A better performing software product is one that saves time for the user. Time is a precious resource for many computer users and much time is wasted on software that is slow, difficult to use, incompatible or error prone. All these problems are usability issues, and we believe that software performance should be seen in the broader perspective of usability.

Choosing the optimal algorithm

When you want to optimize a piece of CPU-intensive software, the first thing to do is to find the right algorithm. For tasks such as sorting, searching, and mathematical calculations, the choice of algorithm is critical. In these instances, selecting the best algorithm will give you much more in terms of performance than optimizing the first algorithm that comes to mind.

In some cases, you need to test several different algorithms to find the one that works best on a typical set of test data. That said, avoid overkill. If a simple algorithm can do the job fast enough, don’t use a complicated one. Some programmers, for example, use a hash table for even the smallest data set.

A hash table can significantly reduce search times for very large databases, but for lists that are so small that a binary search or even a linear search is quick enough, there is no need to use it. A hash table increases program size as well as data file size. If the bottleneck is file access or cache access rather than CPU time, this may actually reduce speed. Another disadvantage of complicated algorithms is that they make program development more error prone and expensive.
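The two lookups below sketch the trade-off for a small data set. The function names and the fixed size of eight elements are assumptions made for the example. Both give the same answer; the linear scan works on a compact, contiguous array with no hashing and no extra allocations, while the hash set pays for its constant average lookup time with more memory and a hash computation per query.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <unordered_set>

// Linear scan over a small, contiguous array.
bool contains_linear(const std::array<int, 8>& items, int key) {
    return std::find(items.begin(), items.end(), key) != items.end();
}

// Hash-based lookup: constant time on average, but each element lives
// in a separately allocated node and every query computes a hash.
bool contains_hashed(const std::unordered_set<int>& items, int key) {
    return items.count(key) != 0;
}

// Sanity check that both approaches agree for a given key.
void check_same_answer(const std::array<int, 8>& items, int key) {
    const std::unordered_set<int> hashed(items.begin(), items.end());
    assert(contains_linear(items, key) == contains_hashed(hashed, key));
}
```

Which version is faster for a given size depends on the data and the machine, so the crossover point is something to measure rather than guess.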

Software development process

There are varying opinions and a long standing debate about which software development method to use. This is often based on a particular application’s requirements. Instead of recommending any specific software development process, there are a few items to consider about how the development process can influence the performance of the final product.

In order to determine which resources are most important, it is essential to do a detailed review of the data structure, data flow, and algorithms during the planning process. But, in the early planning stage, there may be so many unknown factors that it’s not easy to get a thorough overview of the issue.

In complicated cases, a full understanding of the performance bottlenecks only comes at a late stage in the software development process. In this case, you may view the software development work as a learning process where the main feedback comes from testing and from programming problems. Here, you should be prepared for several iterations of redesign.

Some software development processes have an excessive adherence to prescribed forms that requires several layers of abstraction in the logical architecture of the software. You should be aware that there are inherent performance costs to such a formalism. The splitting of software into an excessive number of separate layers of abstraction is a common cause of reduced performance.

Conclusion

Software optimization should be considered from the start of a software development project. Existing software can be significantly improved by optimization.

Optimized software can be the difference between having a viable, efficient application that uses fewer resources and having no product at all, or an application that continually tests your customers’ patience and spends your investment on resources that could be better used elsewhere.

In terms of keeping your customers happy and using your resources efficiently, optimized software will provide better results.

Contact us to hire experienced C++ programmers who can help you make the most of your software application.