It’s no secret that common, everyday software defects cause the majority of software vulnerabilities. This is in part because there is level of expertise expected from software developers that is not always present.

Even though the primary causes of security issues in software are because of defects in the software, defective software is still commonplace.

Often companies will try to save money by using outsourced software development, resulting in costs, in terms productivity, reliability, lost customers, maintainability, and in the case of security breaches, fines and lawsuits, that far exceed the cost of having software developed by experienced, security aware software developers.

There is a huge difference between an experienced software developer, that has honed their craft for decades, and the multitude of individuals calling themselves software developers.

Software Security Flaws

A software defect that poses a potential security risk.

Software engineering has been concerned with the elimination of software defects for a long time. A software defect is the inclusion of a human error into the software.

Software defects can happen at any point in the software development life cycle. For example, a defect in deployed software can come from a misstated, misrepresented or misinterpreted requirement.

Not all software defects pose a security risk. Those that do are security flaws. If we accept that a security flaw is a software defect, then we must also accept that by eliminating software defects, we can eliminate security flaws. This premise underlies the relationship between software engineering and secure programming.

An increase in software quality, which can be measured by defects per thousand lines of code, would likely also result in increased security.

Software Vulnerabilities

Not all security flaws lead to vulnerabilities. However, a security flaw can cause a program to be vulnerable to attack when the program’s input data (for example, command-line parameters) crosses a security boundary en route to the program. This may occur when a program containing a security flaw is installed with execution privileges greater than those of the person running the program or is used by a network service where the program’s input data arrives via the network connection.

Vulnerabilities in Existing Software

In addition to the defects and security vulnerabilities found in legacy software, the vast majority of new software developed today relies on previously developed software components to work. For example, programs often depend on runtime software libraries that are packaged with the compiler or operating system.

Programs also commonly make use of third-party components or other existing off-the-shelf software. One of the unadvertised consequences of using off-the-shelf software is that, even if a software developer writes flawless code, the application may still be vulnerable due to a security flaw in one of these components.

The most widely used operating systems, Windows, Linux and macOS, have one to two defects per thousand lines of code and contain several million lines of code, therefore they typically have thousands of defects.

Application software, while not as large, has a similar number of defects per thousand lines of code. While not every defect is a security concern, if only 1 or 2 percent lead to security vulnerabilities, the risk is substantial.

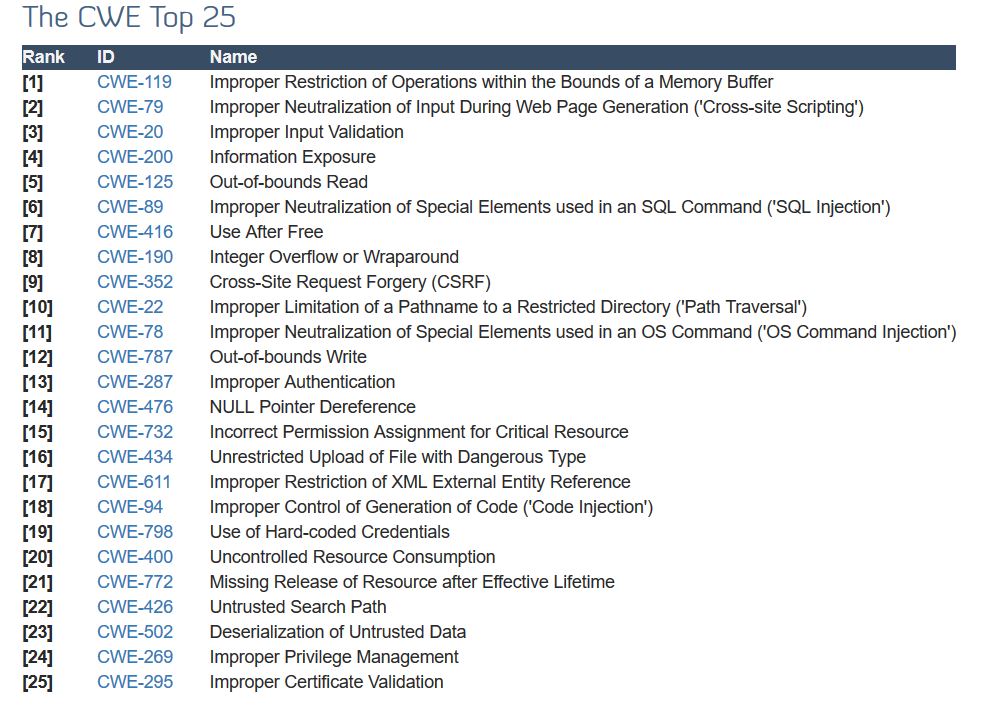

Alan Paller, director of research at the SANS Institute, expressed frustration that “everything on the SANS Institute Top 25 Software Most Dangerous Software Errors vulnerability list is a result of poor coding, testing and sloppy software engineering”. These are not new problems, as anyone might easily assume. Technical solutions exist for all of them, but they are simply not implemented.

CWE Top 25 Common Weakness Enumeration (CWE)1

The results are that numerous defects exist in delivered software, some of which lead to vulnerabilities.

Software Components with Known Vulnerabilities

The National Institute of Standards and Technology (NIST) maintains a National Vulnerability Database (NVD) 2 which is a searchable list of found vulnerabilities.

After being informed of vulnerabilities, the software developers may respond by patching. However, patches are often so numerous that system administrators cannot keep up with their installation. Often the patches themselves contain security defects. The strategy of reacting to security defects does not work. A strategy of prevention and early security defect removal is needed.

Having software developers that understand the sources of vulnerabilities and that have learned to program securely are essential to protecting your business from attack.

Reducing security defects requires a disciplined software engineering approach based on sound design principles and effective quality management practices. See How to Mitigate the Risk of Software Vulnerabilities

For example, certain programming languages allow direct addressing of memory locations and do not automatically ensure that these locations are valid for the memory buffer that is being referenced. This can cause read or write operations to be performed on memory locations that may be associated with other variables, data structures, or internal program data.

Some functions in the C standard library 3 have been notorious for having buffer overflow vulnerabilities and generally encouraging buggy programming ever since their adoption. The most criticized items are:

- string-manipulation routines, including strcpy() and strcat(), for lack of bounds checking and possible buffer overflows if the bounds aren’t checked manually;

- string routines in general, for side-effects, encouraging irresponsible buffer usage, not always guaranteeing valid null-terminated output, linear length calculation;

- printf() family of routines, for spoiling the execution stack when the format string doesn’t match the arguments given. This fundamental flaw created an entire class of attacks: format string attacks;

- gets() and scanf() family of I/O routines, for lack of (either any or easy) input length checking.

As a result, an attacker may be able to execute arbitrary code, alter the intended control flow, read sensitive information, or cause the system to crash.

Except in extreme cases with gets(), all the above security vulnerabilities can be avoided by using auxiliary code to perform memory management, bounds checking, input checking, etc. This is often done in the form of wrappers that make standard library functions safer and easier to use.

Because many of these problems have been known for some time, there are now fixed versions of these functions in many software libraries.

It may seem that it is now safe to use these functions because the problem has been corrected. But is it really? Modern operating systems typically support dynamically linked libraries or shared libraries. In this case, the library code is not statically linked with the executable but is found in the environment in which the program is installed.

So, if our hypothetical application is designed to work with a particular library that is installed in an environment in which an older insecure version of the function is installed, the program will be susceptible to the vulnerabilities.

At least 53% of websites on the web using PHP, which is implemented in the C programming language, are still on discontinued version 5.6 or older. Because of the popularity of PHP, that means more than half of the websites in the world run an implementation of a programming language that is no longer supported by their designers.

One solution is for your application to be statically linked with safe libraries. This approach enables you to be sure of the library implementation you’re using. However, this approach does have the downside of creating larger executable images on disk and in memory. Also, it means that your application is not able to take advantage of newer libraries that may repair previously unknown flaws (security and otherwise).

Another solution is to ensure that the values of inputs passed to external functions remain within ranges known to be safe for all existing implementations of those functions.

Software Flaw Mitigation

There are many approaches at varying levels of maturity that show great promise for reducing the number of vulnerabilities in software.

Many tools, techniques, and processes that are designed to eliminate software defects also can be used to eliminate security flaws. However, many security flaws go undetected because traditional software development methods seldom assume the existence of attackers.

For example, software testing will normally validate that an application works correctly for a reasonable range of user inputs. Unfortunately, attackers are rarely reasonable and will spend an inordinate amount of time devising inputs that will break a system.

To identify and prioritize security flaws according to the risk they pose, existing tools and methods must be extended or supplemented to assume the existence of an attacker.

Mitigation Methods, techniques, processes and tools that can prevent or limit exploits against vulnerabilities.

Software flaw mitigation is a solution or workaround for a software flaw that can be applied to prevent exploitation of a vulnerability.

At the source code level, mitigations can be as simple as replacing an unbounded string copy operation with a bounded one.

At a system or network level, a mitigation might involve turning off a port or filtering traffic to prevent an attacker from accessing a vulnerability.

Mitigations are also called countermeasures or avoidance strategies. The preferred way to eliminate security flaws is to find and fix the actual software defect. However, in some cases it can be more cost-effective to mitigate the security flaw by preventing malicious inputs from reaching the defect.

Generally, this approach is less desirable because it requires the developer to understand and protect the code against all manner of attacks as well as to identify and protect all paths in the code that lead to the defect.

Stackbuffer overrun detection

A well-known example of a software vulnerability is a stack-based buffer overrun. This type of vulnerability is typically exploited by overwriting critical data used to execute code after a function has completed.

Over the years, a number of control-flow integrity schemes have been developed to inhibit malicious stack buffer overflow exploitation. These may usually be classified into three categories:

- Detect that a stack buffer overflow has occurred and thus prevent redirection of the instruction pointer to malicious code.

- Prevent the execution of malicious code from the stack without directly detecting the stack buffer overflow.

- Randomize the memory space such that finding executable code becomes unreliable.

The best defense against stack-based overflow attacks is the use of secure coding practices, mostly by stopping the use of functions that allow for unbounded memory access and carefully calculating memory access to prevent attackers from modifying adjacent values in memory.

If attackers can only access the memory of the variable they intend to change, they can’t affect code execution beyond the expectations of the developer.

Unfortunately, there are thousands of existing legacy programs that have unsafe, unbounded functions to access memory, and re-coding all of them to meet secure coding practices is simply not feasible. For these legacy programs, operating system manufacturers have implemented several ways to prevent poor coding practices that result in arbitrary code execution.

For example, since Microsoft Visual C++ 2002, the Microsoft Visual C++ compiler has included support for the code Generation Security (/GS) compiler switch, which when enabled, introduces an additional security check designed to help mitigate the exploitation of stack-based buffer overrun.

This stack-based buffer overrun software flaw mitigation works by placing a random value (known as a cookie) prior to the critical data that an attacker would want to overwrite. This cookie is checked when the function completes to ensure it is equal to the expected value. If a mismatch exists, it is assumed that corruption occurred and the program is safely terminated.

This simple concept demonstrates how a secret value (in this example, the cookie) can be used to break certain exploitation techniques, by detecting corruption at critical points in the program. The fact that the value is secret introduces a knowledge deficit that is generally difficult for the attacker to overcome.

Vulnerabilities can also be addressed in operational software by isolating the vulnerability or preventing malicious inputs from reaching the vulnerable code. Of course, operationally addressing vulnerabilities significantly increases the cost of mitigation because the cost is pushed out from the software developer to system administrators and end users. Additionally, because the mitigation must be successfully implemented by host system administrators or users, there is increased risk that vulnerabilities will not be properly addressed in all cases.

Secure Coding Standards

An essential element of secure software is well-documented and enforceable coding standards. Coding standards encourage programmers to follow a uniform set of rules and guidelines determined by the requirements of the project and organization rather than by the programmer’s familiarity or preference.

CERT coordinates the development of secure coding standards by security researchers, language experts, and software developers using a wiki-based community process. CERT’s secure coding standards 4 have been adopted by companies such as Cisco and Oracle.

The CERT solution is to combine static and dynamic analysis to handle legacy code with low overhead. These methods can be used to eliminate several important classes of vulnerabilities, including writing outside the bounds of an object (for example, buffer overflow), reading outside the bounds of an object, and arbitrary reads/writes (wild-pointers). The buffer overflow problem, for example, is solved by static analysis for issues that can be resolved at compile and link time and by dynamic analysis using highly optimized code sequences for issues that can be resolved only at runtime.

The use of secure coding standards defines a set of requirements against which the source code can be evaluated for conformance. Secure coding standards provide a metric for evaluating and contrasting software security, safety, reliability, and related properties. Faithful application of secure coding standards can eliminate the introduction of known source-code-related vulnerabilities.

Robert C. Seacord 5 leads the secure coding initiative in the CERT Program at the Software Engineering Institute (SEI) in Pittsburgh, PA

Secure Software Development Principles

Although software development principles alone are not sufficient for secure software development, they can help guide secure software development practices. Some of the earliest secure software development principles were proposed by Jerome Saltzer and Michael Schroeder in their 1975 article “The Protection of Information in Computer Systems” 6, that from their experience are important for the design of secure software systems. These 10 principles apply today as well and are repeated verbatim here.

Although subsequent work has built on these basic security principles, the essence remains the same. The result is that these principles have withstood the test of time.

- Economy of mechanism: Keep the design as simple and small as possible.

- Fail-safe defaults: Base access decisions on permission rather than exclusion.

- Complete mediation: Every access to every object must be checked for authority.

- Open design: The design should not be secret.

- Separation of privilege: Where feasible, a protection mechanism that requires two keys to unlock it is more robust and flexible than one that allows access to the presenter of only a single key.

- Least privilege: Every program and every user of the system should operate using the least set of privileges necessary to complete the job.

- Least common mechanism: Minimize the amount of mechanisms common to more than one user and depended on by all users.

- Psychological acceptability: It is essential that the human interface be designed for ease of use, so that users routinely and automatically apply the protection mechanisms correctly.

- Work factor: Compare the cost of circumventing the mechanism with the resources of a potential attacker.

- Compromise recording: It is sometimes suggested that mechanisms that reliably record that a compromise of information has occurred can be used in place of more elaborate mechanisms that completely prevent loss.

Obviously, these principles do not represent absolute rules, they serve best as warnings. If some part of a design violates a principle, the violation is a symptom of potential trouble, and the design should be carefully reviewed to be sure that the trouble has been accounted for or is unimportant.

Conclusion

Higher quality software, which can be measured by the number of defects, is not only much more reliable and efficient, it greatly improves your organization’s and customer’s security.

Improving your software is an investment that pays for itself many times over.

Contact us for an evaluation of your software’s security.